What Is Adaptive Experimentation? A Complete Guide for Students and Researchers



Introduction

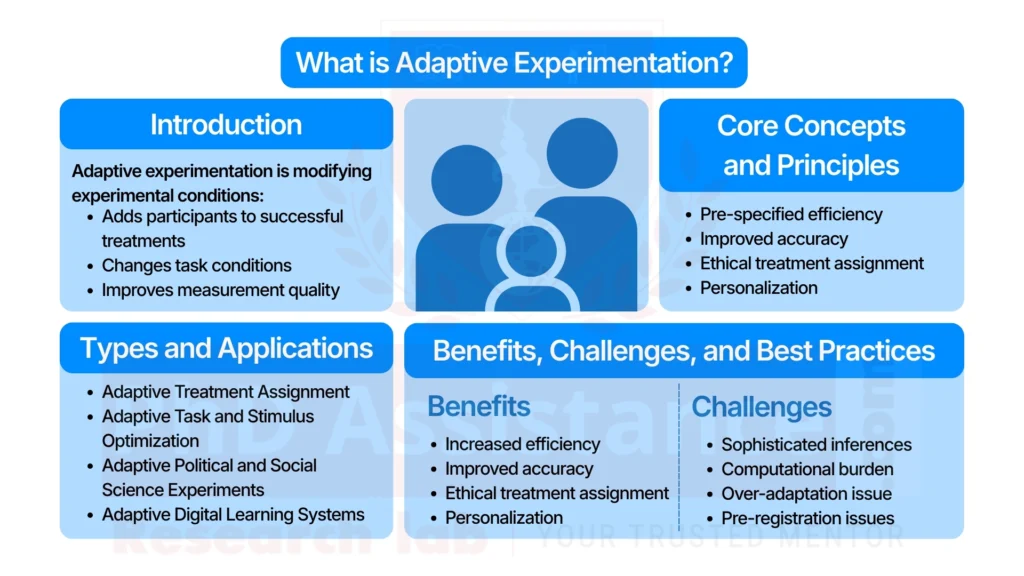

Adaptive experimentation refers to research designs that modify experimental conditions in real time based on the data being collected. Unlike traditional fixed experiments, adaptive designs let researchers learn as the study unfolds: assigning more participants to the most successful treatments, adjusting task conditions, or refining measurement during the study. According to Offer-Westort et al. (2021), adaptive experimentation speeds up learning through feedback loops, allowing researchers to reach conclusions sooner than with static designs.

Once a niche technique largely confined to statistics and biomedical science, adaptive experimentation is now increasingly used in psychology, political science, public policy, neuroscience, and education. Recent applications include decision-making in policy trials (Kasy & Sautmann, 2021), computational modelling (Kwon et al., 2023), statistical inference (Hadad et al., 2021), and digital assessment (Zhang & Huang, 2024), which shows how far adaptive methods now reach into the foundations of these fields. This guide is intended as a practical starting point for students and researchers planning their first adaptive study.

Core Concepts and Principles of Adaptive Experimentation

At its core, adaptive experimentation relies on iterative decision rules that modify the experimental setup, such as which treatment participants receive, the difficulty of a stimulus, or the probability of being sampled, depending on the data accumulated so far. According to Marschner (2021), these adaptations are governed by algorithms specified in advance rather than by arbitrary researcher decisions, which keeps the results valid and the process transparent.

Key principles:

Pre-specified adaptation rules: Researchers must decide in advance what will be modified and when. For instance, a trial comparing two treatments might specify that, after the first 100 observations, 70% of new subjects are assigned to whichever treatment is performing better.

Sequential learning: Researchers analyze the data continuously as they arrive and update their estimates accordingly, as in Bayesian adaptive trials.

Efficiency and ethical advantage: Adaptive allocation can reduce the number of participants exposed to an ineffective treatment (Kasy & Sautmann, 2021). For instance, in a study of financial penalties intended to improve school attendance, an adaptive design could assign more students to the condition showing a positive effect, minimizing exposure to the less effective one.

Complex inference challenges: Because assignment probabilities change during the study, standard confidence interval methods may no longer be valid. Hadad et al. (2021) propose methods for constructing confidence intervals in adaptive experiments, making rigorous statistical inference possible.
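The first two principles can be made concrete with a short sketch. It encodes the hypothetical 50/50-then-70/30 rule from the pre-specification example as a function fixed before any data arrive, and it updates each arm's Beta posterior as binary outcomes come in. The binary outcome, the uniform Beta(1, 1) prior, and all names are illustrative assumptions, not a method taken from the cited sources.

```python
# Hypothetical pre-registered rule (illustrative values from the example):
# 50/50 allocation for the first 100 observations, then 70% of new subjects
# go to the arm with the higher posterior mean success rate.
BURN_IN = 100
FAVOURED_SHARE = 0.7


def posterior_mean(successes, failures, prior_a=1.0, prior_b=1.0):
    """Mean of the Beta(prior_a + successes, prior_b + failures) posterior."""
    a = prior_a + successes
    b = prior_b + failures
    return a / (a + b)


def update(counts, arm, success):
    """Sequential learning: record one new binary outcome for `arm`."""
    s, f = counts[arm]
    counts[arm] = (s + 1, f) if success else (s, f + 1)


def allocation_probs(counts, n_observed):
    """Probability that the next subject is assigned to arm "A" or "B".

    `counts` maps each arm to a (successes, failures) tuple.
    Ties in posterior mean go to the first arm listed in `counts`.
    """
    if n_observed < BURN_IN:
        return {"A": 0.5, "B": 0.5}
    leader = max(counts, key=lambda arm: posterior_mean(*counts[arm]))
    other = "B" if leader == "A" else "A"
    return {leader: FAVOURED_SHARE, other: 1 - FAVOURED_SHARE}


# Example: after 100 observations, A leads (30/50 vs 20/50 successes).
counts = {"A": (30, 20), "B": (20, 30)}
probs = allocation_probs(counts, n_observed=100)
```

With these counts, arm A would receive 70% of new subjects; before the 100th observation the same function returns an even split, so the adaptation happens only where the pre-registered rule says it should.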

Types and Applications of Adaptive Designs

Adaptive experimentation is a versatile approach that can be applied across many fields, from behaviour-change interventions to evaluating the impact of government policies.

• Adaptive Treatment Assignment

Kasy and Sautmann (2021) advocate algorithms based on Thompson sampling and related bandit approaches, which continuously shift new subjects toward the treatments predicted to have a higher probability of success.

Example: A mental health researcher evaluating three different apps might direct more new patients to whichever app currently shows the greatest reduction in anxiety scores.
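Thompson sampling itself is short to sketch. Each arm's success rate has a Beta posterior; for every new participant, one plausible rate is drawn per arm and the participant goes to the arm with the highest draw. The sketch assumes a binary outcome (for example, a hypothetical threshold for a meaningful drop in anxiety score) and simulated true rates. It is plain Thompson sampling, not necessarily the exact variant analyzed by Kasy and Sautmann (2021).

```python
import random


def thompson_choose(successes, failures, rng):
    """Thompson sampling with Beta(1, 1) priors on each arm's success rate.

    Draw one plausible success rate per arm from its Beta posterior and
    return the index of the arm with the highest draw.
    """
    draws = [rng.betavariate(1 + s, 1 + f) for s, f in zip(successes, failures)]
    return max(range(len(draws)), key=lambda i: draws[i])


def run_trial(true_rates, n_participants, seed=0):
    """Simulate sequential assignment and return participants per arm.

    `true_rates` are hypothetical probabilities that each app produces a
    "success" (e.g., an assumed threshold drop in anxiety score).
    """
    rng = random.Random(seed)
    k = len(true_rates)
    successes, failures, assigned = [0] * k, [0] * k, [0] * k
    for _ in range(n_participants):
        arm = thompson_choose(successes, failures, rng)
        assigned[arm] += 1
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return assigned


# Three hypothetical apps; the third is truly the most effective.
allocation = run_trial([0.3, 0.4, 0.6], n_participants=1000)
```

In runs like this, most participants typically end up with the third app, while the weaker apps still receive occasional exploratory assignments because their posteriors keep some chance of producing the highest draw.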

• Adaptive Task and Stimulus Optimization

Kwon et al. (2023) present adaptive design optimization (ADO) as a promising tool for reliable and efficient computational fingerprinting in cognitive neuroscience. By adjusting task parameters (e.g., difficulty, timing, feedback schedules) in real time, ADO extracts more diagnostic information from fewer trials.

Example: In a decision-making experiment, the system might present more difficult trials when a participant is performing well, generating richer behavioural signatures.
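The core loop of ADO chooses each trial's design to maximize the expected information about model parameters, then updates the posterior with the observed response. The sketch below assumes a one-parameter logistic model of accuracy (ability `theta` versus trial difficulty `d`), a grid prior, and a grid of candidate difficulties; these choices and all names are illustrative and are not the models used by Kwon et al. (2023). Expected information is computed as the mutual information between the next response and `theta`.

```python
import math


def p_correct(theta, d):
    """Assumed logistic model: P(correct | ability theta, difficulty d)."""
    return 1.0 / (1.0 + math.exp(-(theta - d)))


def entropy(p):
    """Entropy (in nats) of a binary response with success probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))


def expected_information(post, thetas, d):
    """Mutual information between the next response and theta under design d.

    I(theta; Y) = H(Y) - E_theta[H(Y | theta)], using the current grid posterior.
    """
    marginal = sum(w * p_correct(t, d) for w, t in zip(post, thetas))
    conditional = sum(w * entropy(p_correct(t, d)) for w, t in zip(post, thetas))
    return entropy(marginal) - conditional


def choose_design(post, thetas, designs):
    """Pick the candidate difficulty with the highest expected information."""
    return max(designs, key=lambda d: expected_information(post, thetas, d))


def update(post, thetas, d, correct):
    """Bayes' rule on the grid after observing one response at difficulty d."""
    lik = [p_correct(t, d) if correct else 1.0 - p_correct(t, d) for t in thetas]
    unnorm = [w * x for w, x in zip(post, lik)]
    z = sum(unnorm)
    return [u / z for u in unnorm]


# Hypothetical grids: ability from -3 to 3, candidate difficulties from -3 to 3.
thetas = [i / 10 for i in range(-30, 31)]
designs = [i / 4 for i in range(-12, 13)]
posterior = [1.0 / len(thetas)] * len(thetas)  # uniform prior

d = choose_design(posterior, thetas, designs)
posterior = update(posterior, thetas, d, correct=True)  # e.g., a correct response
```

After a correct response, the posterior shifts toward higher ability, so the next selected difficulty tends to rise, which is the behaviour described in the example. Real ADO applications use richer cognitive models and often more efficient numerical integration than a plain grid.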

• Adaptive Political and Social Science Experiments

Offer-Westort et al. (2021) illustrate applications in political science, for instance, adjusting message framing or reallocating resources based on early persuasion results.

Example: A campaign messaging trial might shift a larger share of participants to the message generating stronger engagement, making more efficient use of the sample.
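When participants are recruited in waves, as is common in survey experiments, allocation can be updated between batches rather than per person. A minimal sketch, assuming a binary engagement outcome, Beta(1, 1) priors, and an illustrative 5% floor per message: estimate each message's posterior probability of being best by Monte Carlo, then use those probabilities, mixed with the floor, as shares for the next batch. The floor and all names are assumptions, not parameters from Offer-Westort et al. (2021).

```python
import random


def prob_best(successes, failures, n_draws=10_000, seed=0):
    """Monte Carlo estimate of P(message i has the highest engagement rate).

    Assumes independent Beta(1 + s, 1 + f) posteriors for each message.
    """
    rng = random.Random(seed)
    k = len(successes)
    wins = [0] * k
    for _ in range(n_draws):
        draws = [rng.betavariate(1 + s, 1 + f) for s, f in zip(successes, failures)]
        wins[max(range(k), key=lambda i: draws[i])] += 1
    return [w / n_draws for w in wins]


def batch_shares(successes, failures, floor=0.05):
    """Share of the next batch to assign to each message.

    Each share is floor + (1 - k * floor) * P(best), so every message keeps
    at least `floor` of the batch and the shares sum to 1. The floor value
    is a hypothetical choice.
    """
    k = len(successes)
    if not 0.0 <= floor * k <= 1.0:
        raise ValueError("floor is too large for the number of messages")
    return [floor + (1.0 - k * floor) * p for p in prob_best(successes, failures)]


# Hypothetical first-wave results for three message frames (100 people each).
engaged = [40, 55, 30]
not_engaged = [60, 45, 70]
next_batch = batch_shares(engaged, not_engaged)
```

The floor is a design choice: it keeps some data flowing to every message, which protects later estimation of all arms at the cost of slightly less exploitation of the leader.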

• Adaptive Digital Learning Systems

Zhang and Huang (2024) describe gamified assessment in blended learning that adapts difficulty levels and types of feedback to each learner's performance.

Example: A reading comprehension tool can raise or lower the complexity of each passage depending on the learner's ability, producing individualized learning curves.
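One common way to implement this kind of adaptation is an Elo-style rating, which keeps a running ability estimate and nudges it after every response. The sketch below is an illustration under stated assumptions, not the system studied by Zhang and Huang (2024): passage difficulties are hypothetical values on the same logistic scale as ability, the step size `k` is arbitrary, and the next passage is chosen so that the predicted success rate is close to a hypothetical 70% target.

```python
import math


def expected_correct(ability, difficulty):
    """Elo-style expected score: predicted probability of a correct answer."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def update_ability(ability, difficulty, correct, k=0.4):
    """Nudge the ability estimate by k times the prediction error (k is hypothetical)."""
    outcome = 1.0 if correct else 0.0
    return ability + k * (outcome - expected_correct(ability, difficulty))


def next_passage(ability, passages, target=0.7):
    """Choose the passage whose predicted success rate is closest to `target`.

    `passages` maps a passage id to an assumed difficulty on the ability scale.
    """
    return min(
        passages,
        key=lambda pid: abs(expected_correct(ability, passages[pid]) - target),
    )


# Hypothetical passage bank and a learner starting at ability 0.
passages = {"short_story": -1.5, "news_article": -0.85, "essay": 0.5, "research_summary": 1.5}
ability = 0.0
choice = next_passage(ability, passages)
ability = update_ability(ability, passages[choice], correct=True)
```

Correct answers raise the estimate and wrong answers lower it, so over many items a stronger reader is steered toward harder passages (at an ability of 3.0 this bank would select `research_summary`), while a struggling reader is steered toward easier ones.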

Benefits, Challenges, and Best Practices

Benefits

1. Increased efficiency:

Adaptive designs can reach conclusions with fewer participants or trials (Offer-Westort et al., 2021).

2. Improved accuracy:

Adaptive optimization can improve model fit and data quality (Kwon et al., 2023).

3. Ethical treatment assignment:

Fewer participants are assigned to an ineffective intervention (Kasy & Sautmann, 2021).

4. Personalization:

Adaptive digital platforms can tailor assessments and tasks to individual users (Zhang & Huang, 2024).

Challenges

1. Complex inference: Because assignment depends on earlier outcomes, naive estimators such as arm-level sample means can be biased, and standard confidence intervals may not achieve their nominal coverage. Hadad et al. (2021) present methods to construct valid confidence intervals.

2. Computational burden: Adaptive designs often require simulation, optimization, and Bayesian computation.

3. Over-adaptation: Marschner (2021) warns that excessive adaptation can fragment the data or amplify noise in early results.

4. Pre-registration: Researchers must specify all adaptations explicitly and transparently in advance.
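The bias problem can be illustrated with a simple inverse-propensity-weighted (IPW) estimator. If the design logs the probability with which each participant was assigned to the arm they actually received, weighting each outcome by the inverse of that probability gives an unbiased estimate of an arm's mean even though allocation changed over time. This is a simpler building block than the adaptively weighted estimators of Hadad et al. (2021): on its own, IPW can have high variance and does not by itself guarantee valid confidence intervals in adaptive experiments. The record format below is a hypothetical assumption.

```python
def naive_arm_mean(records, arm):
    """Plain sample mean for one arm; can be biased when allocation adapts."""
    outcomes = [y for a, y, _ in records if a == arm]
    return sum(outcomes) / len(outcomes)


def ipw_arm_mean(records, arm):
    """Inverse-propensity-weighted estimate of one arm's mean outcome.

    `records` is a list of (assigned_arm, outcome, propensity) tuples, where
    `propensity` is the logged probability that the design assigned that
    participant to the arm they actually received. Each outcome is weighted
    by 1 / propensity and the sum is divided by the total number of records.
    """
    if any(e <= 0 for _, _, e in records):
        raise ValueError("assignment probabilities must be positive")
    return sum(y / e for a, y, e in records if a == arm) / len(records)


# Hypothetical log: allocation to "A" rose from 0.5 to 0.8 mid-study.
log = [
    ("A", 1.0, 0.5),
    ("B", 0.0, 0.5),
    ("A", 1.0, 0.8),
    ("A", 0.0, 0.8),
]
naive = naive_arm_mean(log, "A")
ipw = ipw_arm_mean(log, "A")
```

The practical lesson is that assignment probabilities must be recorded at every step; without them, neither IPW nor the more refined methods in Hadad et al. (2021) can be applied after the fact.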

Best Practices for Researchers

• Pre-register all adaptation rules and decision points

• Assess design performance through simulation studies before data collection

• Use statistical inference methods that remain valid under adaptive sampling (Hadad et al., 2021)

• Monitor early data carefully and avoid ad hoc changes

• Pilot tasks to obtain realistic parameter values for optimization (Kwon et al., 2023)
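A pre-data simulation study can be as simple as running the planned design many times under assumed true effects and summarizing an outcome of interest. The sketch below, assuming binary outcomes and hypothetical success rates, compares a fixed 50/50 design with a hypothetical adaptive rule (70% to the arm with the higher observed success rate after 100 participants) on the average share of participants assigned to the worse arm. A full design evaluation would also track estimator bias, interval coverage, and error rates.

```python
import random


def simulate(design, p_a, p_b, n=400, burn_in=100, reps=500, seed=1):
    """Average share of participants assigned to the worse arm.

    design: "fixed" keeps 50/50 allocation throughout; "adaptive" is a
    hypothetical rule that, after `burn_in` participants, sends 70% of new
    participants to the arm with the higher observed success rate.
    p_a, p_b: assumed true success rates used only for simulation.
    """
    if design not in ("fixed", "adaptive"):
        raise ValueError("design must be 'fixed' or 'adaptive'")
    rng = random.Random(seed)
    rates = {"A": p_a, "B": p_b}
    worse = "A" if p_a < p_b else "B"
    worse_total = 0
    for _ in range(reps):
        successes = {"A": 0, "B": 0}
        counts = {"A": 0, "B": 0}
        for t in range(n):
            share_a = 0.5
            if design == "adaptive" and t >= burn_in:
                # max(..., 1) guards against an arm with no participants yet.
                leader = max(counts, key=lambda arm: successes[arm] / max(counts[arm], 1))
                share_a = 0.7 if leader == "A" else 0.3
            arm = "A" if rng.random() < share_a else "B"
            counts[arm] += 1
            if rng.random() < rates[arm]:
                successes[arm] += 1
        worse_total += counts[worse]
    return worse_total / (reps * n)


fixed = simulate("fixed", p_a=0.3, p_b=0.5)
adaptive = simulate("adaptive", p_a=0.3, p_b=0.5)
```

Comparing `fixed` and `adaptive` shows how much exposure to the inferior arm the adaptive rule saves under these assumptions; rerunning with equal rates or smaller effects shows how the rule behaves when there is little to learn.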

Table 1: Key Insights and Applications of Adaptive Experimental Designs

| Area | Key Insights (with citations) | Examples for Researchers |
| --- | --- | --- |
| Core Principles | Adaptive designs use predefined decision rules and sequential learning (Marschner, 2021). | Adjust trial difficulty based on participant performance in real time. |
| Treatment Assignment | Algorithms such as bandits and Bayesian updating improve efficiency (Kasy & Sautmann, 2021). | Assign more users to the mental health app showing early improvement. |
| Statistical Inference | Standard confidence intervals can fail in adaptive settings; specialized methods are needed (Hadad et al., 2021). | Use adjusted confidence intervals when assignment probabilities change mid-study. |
| Optimization & Modelling | Adaptive design optimization increases precision in cognitive tasks (Kwon et al., 2023). | Increase task difficulty automatically to extract richer behavioural data. |
| Applications in Research & Education | Effective in political science, policy research, and gamified learning (Offer-Westort et al., 2021; Zhang & Huang, 2024). | Modify message frames or learning tasks based on real-time participant responses. |

Future Directions and Implications for Research

Adaptive experimentation is likely to have a rapid impact on data-driven fields where fast learning matters. In political science, adaptive message testing can support large-scale communication studies (Offer-Westort et al., 2021). In policy research, adaptive treatment assignment can inform decision-making in education, health care, and welfare (Kasy & Sautmann, 2021). In cognitive neuroscience, adaptive optimization improves the diagnostic accuracy of computational models (Kwon et al., 2023). In education, adaptive assessments are making personalized feedback possible at scale (Zhang & Huang, 2024).

Researchers adopting adaptive methods will need strong computational skills, experience with sequential decision-making, and familiarity with recent inferential tools. In return, adaptive experimentation offers a way to conduct scientific research more ethically, more efficiently, and with greater statistical power, through designs that continuously learn from data as the experiment unfolds.

References

- Hadad, V., Hirshberg, D. A., Zhan, R., Wager, S., & Athey, S. (2021). Confidence intervals for policy evaluation in adaptive experiments. Proceedings of the National Academy of Sciences, 118(15), e2014602118.

- Kasy, M., & Sautmann, A. (2021). Adaptive treatment assignment in experiments for policy choice. Econometrica, 89(1), 113–132.

- Kwon, M., Lee, S. H., & Ahn, W. Y. (2023). Adaptive design optimization as a promising tool for reliable and efficient computational fingerprinting. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging, 8(8), 798–804.

- Marschner, I. C. (2021). A general framework for the analysis of adaptive experiments. Statistical Science, 36(3), 465–492.

- Offer-Westort, M., Coppock, A., & Green, D. P. (2021). Adaptive experimental design: Prospects and applications in political science. American Journal of Political Science, 65(4), 826–844.

- Zhang, Z., & Huang, X. (2024). Exploring the impact of the adaptive gamified assessment on learners in blended learning. Education and Information Technologies, 29(16), 21869–21889.