How a Strong Conceptual Framework Shapes Dissertation Success in Computer Science



- Role of Conceptual Frameworks in Research Design

- Defining Scope and Delimitations

- A Contemporary Conceptual Model for AI System Development

- Conceptualisation and Theoretical Foundation

- Framework Characteristics and Components

- Value and Application

- Linking Literature to Research Gaps

- Practical Applications in Computer Science

- Challenges and Recommendations

- Recommendations

- Conclusion

- Reference


Introduction

The conceptual framework underpins the intellectual structure of dissertation research and provides a systematic way to link theoretical principles to research questions, variables, and methodological choices. In computer science, where research typically involves complex algorithms, data-driven systems, and human–AI interactions, a conceptual framework provides clarity, coherence, and rigour (Lynch, Ramjan, Glew, & Salamonson, 2025).

This article assesses the purpose and value of using a conceptual framework in computer science dissertations and illustrates the nature and relevance of such frameworks through research-based examples from big data visualisation, predictive maintenance, and artificial intelligence (AI).

Understanding Conceptual Frameworks

A conceptual framework is a purposeful depiction of how key concepts relate to each other and why each relationship matters, grounded in theory and scientific evidence. Whereas a theoretical framework derives from a predetermined theory, a conceptual framework synthesises multiple areas of knowledge to explain how and why a system, algorithm, or process should work (Salawu, Shamsuddin, Bolatitio, & Masibo, 2023).

Example: Jim Samuel’s (2020) framework for big data visualisation illustrates the nature of a conceptual framework. Samuel (2020) drew on the roles of AI, cognition, and data presentation to conceptualise a layered approach to data visualisation, combining AI-assisted pattern discovery with human sensory understanding to improve users’ experience with data.

This example shows what makes a conceptual framework strong: it does not merely define variables but depicts interacting mechanisms and their theoretical logic, grounding technical design in cognitive and analytical theory.

Role of Conceptual Frameworks in Research Design

A conceptual framework is an essential part of research design. It guides the researcher’s methodological choices and data collection so that both remain aligned with the research questions. A conceptual framework can also show how independent and dependent variables may be related and identify any mediating or moderating variables that might be present.
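To make the roles of independent, dependent, mediating, and moderating variables concrete, the Python sketch below encodes a conceptual framework as a directed graph of constructs. It is a minimal illustration under stated assumptions: the class `ConceptualModel`, its methods, and the example constructs (`data_quality`, `model_accuracy`, `user_trust`, `explainability`, `team_size`) are hypothetical and not drawn from any of the cited studies. A mediator is treated here as any construct lying on an indirect path from the independent to the dependent variable, and a moderator is recorded against the relationship it conditions.

```python
from dataclasses import dataclass, field


@dataclass
class ConceptualModel:
    """Hypothetical sketch: constructs are nodes, hypothesised effects are directed edges."""
    edges: dict[str, set[str]] = field(default_factory=dict)
    moderators: dict[tuple[str, str], set[str]] = field(default_factory=dict)

    def add_construct(self, name: str) -> None:
        self.edges.setdefault(name, set())

    def relate(self, cause: str, effect: str) -> None:
        """Record a hypothesised effect of `cause` on `effect`."""
        self.add_construct(cause)
        self.add_construct(effect)
        self.edges[cause].add(effect)

    def moderate(self, moderator: str, cause: str, effect: str) -> None:
        """Record that `moderator` conditions the cause -> effect relationship."""
        self.add_construct(moderator)
        self.moderators.setdefault((cause, effect), set()).add(moderator)

    def paths(self, start: str, end: str) -> list[list[str]]:
        """All simple directed paths from start to end (iterative depth-first search)."""
        found, stack = [], [(start, [start])]
        while stack:
            node, path = stack.pop()
            if node == end:
                found.append(path)
                continue
            for nxt in sorted(self.edges.get(node, set())):
                if nxt not in path:
                    stack.append((nxt, path + [nxt]))
        return found

    def mediators(self, iv: str, dv: str) -> set[str]:
        """Constructs on an indirect path from the independent to the dependent variable."""
        return {node for path in self.paths(iv, dv) for node in path[1:-1]}

    def unlinked(self) -> set[str]:
        """Constructs with no hypothesised relationship and no moderating role."""
        targets = {t for ts in self.edges.values() for t in ts}
        moderating = {m for ms in self.moderators.values() for m in ms}
        return {n for n, ts in self.edges.items() if not ts and n not in (targets | moderating)}


# Hypothetical dissertation model: does data quality affect user trust in an ML system?
model = ConceptualModel()
model.relate("data_quality", "model_accuracy")
model.relate("model_accuracy", "user_trust")
model.relate("data_quality", "user_trust")
model.moderate("explainability", "model_accuracy", "user_trust")
model.add_construct("team_size")  # listed, but not yet connected to anything

print(model.mediators("data_quality", "user_trust"))  # {'model_accuracy'}
print(model.unlinked())                                # {'team_size'}
```

Writing the framework down this way makes gaps visible: a construct reported by `unlinked()` is one the framework names but has not yet related to any other construct.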

Example:

Arena et al. (2024) developed a conceptual framework to support the decision of which machine-learning algorithm to use for predictive maintenance (PdM). Their model considers decision factors such as dataset characteristics, learning objectives, interpretability, and accuracy requirements, giving researchers a map for identifying the most suitable ML algorithm for industrial use (Arena et al., 2024).
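One hedged way to picture how such decision factors narrow the choice of algorithm is a rule-based shortlist. The Python sketch below is not the Arena et al. (2024) framework: the `Candidate` catalogue, its attributes, and the `shortlist` function are hypothetical simplifications chosen for illustration, and which algorithm families count as interpretable is itself a judgement a real study would need to justify. Accuracy requirements are deliberately left out, on the assumption that they would be checked empirically (for example, through cross-validation) once a shortlist exists.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    tasks: frozenset[str]  # learning objectives the family can address
    needs_labels: bool     # does it require labelled failure data?
    interpretable: bool    # can engineers readily inspect its reasoning? (assumed)


# Hypothetical catalogue for illustration only; not taken from Arena et al. (2024).
CATALOGUE = [
    Candidate("decision tree", frozenset({"classification", "regression"}), True, True),
    Candidate("linear or logistic regression", frozenset({"classification", "regression"}), True, True),
    Candidate("random forest", frozenset({"classification", "regression"}), True, False),
    Candidate("LSTM network", frozenset({"classification", "regression"}), True, False),
    Candidate("isolation forest", frozenset({"anomaly"}), False, False),
    Candidate("autoencoder", frozenset({"anomaly"}), False, False),
]


def shortlist(objective: str, has_labels: bool, need_interpretability: bool) -> list[str]:
    """Keep candidates that fit the objective, the available labels, and interpretability needs."""
    return [
        c.name
        for c in CATALOGUE
        if objective in c.tasks
        and (has_labels or not c.needs_labels)
        and (c.interpretable or not need_interpretability)
    ]


print(shortlist("regression", has_labels=True, need_interpretability=True))
# ['decision tree', 'linear or logistic regression']
print(shortlist("anomaly", has_labels=False, need_interpretability=False))
# ['isolation forest', 'autoencoder']
```

Keeping the catalogue as data rather than as nested conditionals makes each assumption visible and easy for a reader or examiner to challenge.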

Defining Scope and Delimitations

Conceptual frameworks help define the limits of an inquiry and keep researchers focused. For example, the Big Data Visualisation Framework (Samuel, 2020) draws a boundary between data-intensive back-end computation performed by AI and the interpretive, human-centred layers of the study, and this boundary organises the research.

This gives researchers a clear scope and gives the study a clear start and end point. Similarly, a conceptual framework for a cybersecurity dissertation might restrict the study to threat detection in IoT networks, excluding both threat response and more distant areas such as cryptographic algorithm design, even though such work could deepen the study.

People–Process–Data–Technology (2PDT) Framework: A Contemporary Conceptual Model for AI System Development

One notable recent development in conceptual modelling within AI and information systems (IS) is the People–Process–Data–Technology (2PDT) Framework, which this section examines. Developed through an analysis of key IS theory (Hevner et al., 2004; Jessup & Valacich, 2008; Larsen & Eargle, 2015) and current literature, the 2PDT Framework is proposed as a design-and-action-oriented theory (Gregor, 2006; Gregor & Jones, 2007) for improving artificial intelligence system development (AI SD) outcomes.

The 2PDT Framework represents a contemporary expansion of classical IS approaches by incorporating four interdependent constructs – People, Process, Data and Technology – into a holistic organising framework for evaluating and informing AI-focused organisational initiatives (Monshizada, Sarbazhosseini, & Mohammadian, 2021).

Conceptualisation and Theoretical Foundation

The 2PDT framework was developed from a design science research (DSR) perspective (Hevner et al., 2004), drawing on both established IS theory and new theoretical contributions. The IS community encourages this approach within design science research as a way of producing “good theory” that generates value for practice (Lee, 2001; Weber, 2003). In Gregor’s (2006) taxonomy of theory types, 2PDT is a Type V (design and action) theory, because it prescribes explicit ways to improve AI SD performance rather than simply describing or predicting phenomena.

The conceptualisation process relied on a systematic literature review to identify key failure points in organisational AI projects, including skill shortages, fragmented data, and misaligned processes (Berente et al., 2021; Sun & Medaglia, 2019). These findings were then used to conceptualise a framework that helps organisations avoid such failure points through integration, standardisation, and continuous improvement in AI SD.

Framework Characteristics and Components

The 2PDT framework consists of four key dimensions:

- People – All human factors, such as expertise, partnerships, leadership, and ethics (Jessup & Valacich, 2008; Lyytinen & Newman, 2008).

- Process – Organisational workflows and the development process, which enable agility, transparency, and quality assurance (Hevner et al., 2004).

- Data – The integrity and accessibility of data and the governance systems that underpin trustworthy AI performance (Rezazade Mehrizi, Rodon Modol, & Nezhad, 2019).

- Technology – The tools, systems, and AI algorithms used to develop and implement AI systems (Mantei & Teorey, 1989; Valacich, George, & Hoffer, 2004).

In contrast to the traditional System Development Life Cycle (SDLC), which organises system development into sequential phases, the 2PDT framework is an iterative, evaluative approach that can be applied at any stage of an AI system’s maturity, from ideation through implementation. This makes it agile, rigorous, and evolutionary. The framework’s completeness comes from combining theoretical (conceptual) principles with empirical findings from AI domain experts.
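Because the framework can be applied at any stage, its four dimensions lend themselves to a simple, repeatable self-assessment. The Python sketch below is a hypothetical illustration, not an instrument published with the 2PDT framework: it assumes each dimension is rated on several checklist items using a 1 to 5 scale, averages the ratings per dimension, and reports the weakest dimension and the spread between the strongest and weakest as rough signals of misalignment. The `Dimension` enum, the `assess` function, and the example ratings are introduced here for illustration only.

```python
from enum import Enum
from statistics import mean


class Dimension(Enum):
    PEOPLE = "People"
    PROCESS = "Process"
    DATA = "Data"
    TECHNOLOGY = "Technology"


def assess(ratings: dict[Dimension, list[int]]) -> dict[str, object]:
    """Summarise hypothetical 1-5 checklist ratings for each 2PDT dimension."""
    for dim in Dimension:
        items = ratings.get(dim)
        if not items:
            raise ValueError(f"no ratings for the {dim.value} dimension")
        if any(not 1 <= r <= 5 for r in items):
            raise ValueError(f"{dim.value} ratings must be between 1 and 5")
    averages = {dim.value: mean(ratings[dim]) for dim in Dimension}
    weakest = min(averages, key=averages.get)  # the first dimension wins on ties
    spread = max(averages.values()) - min(averages.values())
    return {"averages": averages, "weakest": weakest, "spread": spread}


# Hypothetical ratings, e.g. gathered at the ideation stage of an AI project.
result = assess({
    Dimension.PEOPLE: [4, 3],      # e.g. skills available, leadership support
    Dimension.PROCESS: [3, 3],     # e.g. agile workflow, quality assurance
    Dimension.DATA: [2, 1],        # e.g. data integrity, governance
    Dimension.TECHNOLOGY: [5, 4],  # e.g. tooling, deployment infrastructure
})
print(result["weakest"], result["spread"])  # Data 3.0
```

Re-running the same assessment at later stages would show whether the dimensions are converging; what spread counts as "too large" is a further assumption the researcher would need to justify.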

Value and Application

Practical investigation of the 2PDT framework showed that organisations implementing it achieved better alignment of its four elements (people, process, data, and technology), which in turn increased AI project success rates (Monshizada et al., 2021).

Although intended primarily for AI system development, the framework’s overall structure allows it to be applied in other complex areas, such as predictive analytics, cybersecurity, and software engineering, where multiple interdependent systems interact dynamically. In short, the 2PDT framework exemplifies how merging theory, evidence, and practice produces models that are both scholarly and practically impactful in computer science.

Linking Literature to Research Gaps

Creating a conceptual framework requires a comprehensive literature review that connects existing models to unaddressed issues.

Example:

Podo, Ishmal, and Angelini (2024) introduced EvaLLM, a structured theoretical framework for evaluating generative AI-based visualisations. Their model breaks evaluation down into fundamental dimensions, such as prompt consistency, grammar-based generation, and hallucination control.

This research is significant because it shows how a conceptual framework can unite existing AI literature on large language models (LLMs) with new performance metrics, connecting theory and application.
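Dimensions such as prompt consistency, grammar, and hallucination can be operationalised in many ways. The Python sketch below shows one hypothetical way to turn them into measurable checks; it is not the EvaLLM implementation. It assumes that generated charts are Vega-Lite-style JSON specifications, that a valid chart needs `mark` and `encoding` keys, and that a hallucination is an encoded field absent from the dataset. The function names and example strings are illustrative only.

```python
from __future__ import annotations

import json
from collections import Counter

# Assumption: a minimal Vega-Lite-style grammar where every chart needs these keys.
REQUIRED_KEYS = {"mark", "encoding"}


def parse(raw: str) -> dict | None:
    """Return the model output as a dict, or None if it is not a JSON object."""
    try:
        spec = json.loads(raw)
    except json.JSONDecodeError:
        return None
    return spec if isinstance(spec, dict) else None


def grammar_ok(spec: dict | None) -> bool:
    """Grammar check: the output parsed and contains every required top-level key."""
    return spec is not None and REQUIRED_KEYS.issubset(spec)


def hallucinated_fields(spec: dict | None, columns: set[str]) -> set[str]:
    """Hallucination check: encoded fields that do not exist in the dataset."""
    if spec is None or not isinstance(spec.get("encoding"), dict):
        return set()
    used = {channel["field"] for channel in spec["encoding"].values()
            if isinstance(channel, dict) and "field" in channel}
    return used - columns


def consistency(raw_outputs: list[str]) -> float:
    """Prompt-consistency check: share of repeated generations matching the most common spec."""
    canonical = [json.dumps(s, sort_keys=True) for s in map(parse, raw_outputs) if s is not None]
    if not canonical:
        return 0.0
    return Counter(canonical).most_common(1)[0][1] / len(raw_outputs)


columns = {"date", "temperature"}
output = '{"mark": "line", "encoding": {"x": {"field": "date"}, "y": {"field": "humidity"}}}'
spec = parse(output)
print(grammar_ok(spec))                    # True
print(hallucinated_fields(spec, columns))  # {'humidity'}
print(consistency([output, output, '{"mark": "bar"}', "not json"]))  # 0.5
```

Because `consistency` divides by all outputs, an unparseable generation counts as inconsistent; a study could equally choose to report parse failures separately.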

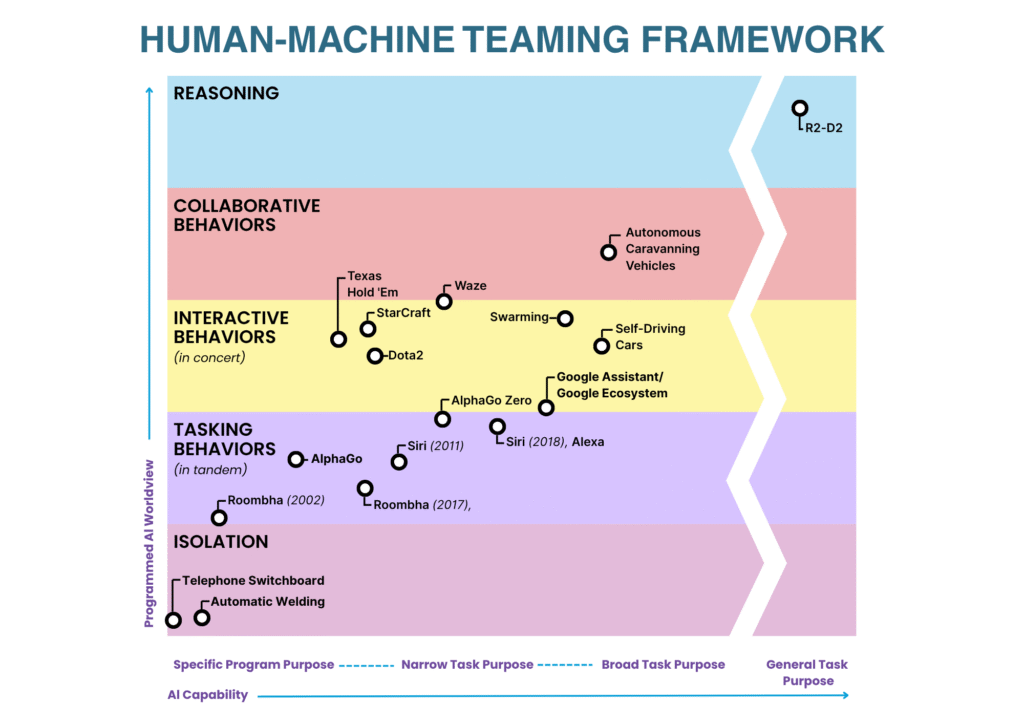

Another example is human–machine teaming frameworks, such as DARPA’s Three Waves of AI model and Grosz’s (1996) theory of collaborative systems. These frameworks trace AI’s shift from rule-based reasoning to machine-learning-driven adaptability and indicate how the relationship between future AI systems, automation, and human supervision should be governed (DARPA, 2016; Grosz, 1996).

Practical Applications in Computer Science

1. Artificial Intelligence and Machine Learning

In AI research, conceptual frameworks define and clarify the interactions among learning methods, data quality, and evaluation metrics.

For instance, the Predictive Maintenance ML Framework of Arena et al. (2024) illustrates how decision variables (data attributes, model interpretability, and accuracy) shape the choice of algorithm.

Likewise, for generative AI, the EvaLLM framework of Podo et al. (2024) delineates the interdependence of prompt design, output fidelity, and evaluation methods, offering a methodical way of investigating generative visualisation systems.

2. Big Data Visualisation and Cognitive Analytics

Jim Samuel’s (2020) conceptual framework for AI-augmented data visualisation suggests that the presentation layer, meaning the way data is displayed to users, plays a crucial role in human decision-making.

The framework combines behavioural data, machine learning, and visual cognition theory, linking computation with human understanding.

3. Human–Machine Teaming and Adaptive Systems

Conceptual models from the evolution of AI research (such as DARPA’s Three Waves of AI and IBM’s Broad AI) show how machine learning, automation, and human collaboration co-evolve. These models indicate that as AI takes on higher-order cognitive functions, it requires greater context awareness, human interaction, and team adaptability, all of which are important considerations when designing autonomous or semi-autonomous systems.

Adapted from JSTOR


Challenges and Recommendations

Common difficulties in creating conceptual frameworks include the following:

- Existing theories are poorly matched with each other.

- Relationships are described rather than analysed.

- No visual representation is provided.

- Constructs and variables are not clearly linked.

Recommendations

- Refine the framework iteratively as your understanding improves (Lynch et al., 2025).

- Use visual modelling tools, such as UML diagrams, cause-and-effect loops, or Bayesian maps.

- Align constructs directly with hypotheses and research methods.

- Reference validated frameworks like those by Samuel (2020), Arena et al. (2024), and Podo et al. (2024) to maintain conceptual integrity.

Conclusion

A robust conceptual framework turns a computer science dissertation into a clear, theory-informed research activity rather than a purely technical exercise. It defines the study’s boundaries, clarifies its line of reasoning, and bridges the gap between theoretical understanding and the application of computational techniques.

Research on AI-powered visualisation (Samuel, 2020), predictive maintenance models (Arena et al., 2024), and the evaluation of LLM-generated visualisations (Podo et al., 2024) offers just a few examples of how conceptual frameworks connect every part of a study, from algorithms to human understanding, through deliberate logic rather than chance.

Ultimately, conceptual frameworks in computer science do more than assist: they improve the quality of researchers’ systems, the interpretation of their findings, and the precision and conceptual depth of their communication with the academic community.

References

Arena, S., Florian, E., Sgarbossa, F., Sølvsberg, E., & Zennaro, I. (2024). A conceptual framework for machine learning algorithm selection for predictive maintenance. Engineering Applications of Artificial Intelligence, 130, 108340. https://doi.org/10.1016/j.engappai.2024.108340

DARPA. (2016). DARPA Perspective on AI: The Three Waves of AI. Defense Advanced Research Projects Agency. https://www.darpa.mil/about-us/darpa-perspective-on-ai-three-waves

Grosz, B. J. (1996). Collaborative systems: AAAI-94 presidential address. AI Magazine, 17(2), 67–85. https://ojs.aaai.org/aimagazine/article/view/1234

Lynch, A., Ramjan, L., Glew, P., & Salamonson, Y. (2025). Embedding conceptual frameworks in postgraduate dissertations: Strategies for alignment and rigor. Journal of Higher Education Research and Practice, 19(2), 45–59.

Podo, L., Ishmal, M., & Angelini, M. (2024). Toward a structured theoretical framework for the evaluation of generative AI-based visualisations. Sapienza University of Rome. https://github.com/lucapodo/evallm_llama2_70b.git

Samuel, J. (2020). Conceptual frameworks for big-data visualisation: Discussion on models, methods, and artificial intelligence for graphical representations of data. In Handbook of Research for Big Data Concepts and Techniques (pp. 1–31). Apple Academic Press. https://ssrn.com/abstract=3640058

Salawu, H., Shamsuddin, S. M., Bolatitio, A., & Masibo, P. (2023). Synthesising empirical insights through conceptual frameworks: A review across computing disciplines. Information and Software Technology, 159, 107112. https://doi.org/10.1016/j.infsof.2023.107112